Machine Encounters: Introduction to “Whose Bias?”

Background information

“Whose bias? Demystifying human and machine interactions in Machine Learning” is an interactive event organised by the Digital Humanities Research Hub (School of Advanced Study, University of London) and Cambridge Digital Humanities (University of Cambridge). It invites participants to consider what bias is in the context of AI systems and where it comes from.

The organisational team was composed of Jonathan Blaney (Research Software Engineer, Cambridge Digital Humanities), Michael Donnay (Manager, Digital Humanities Research Hub, School of Advanced Study, University of London), Kunika Kono (Technical Lead, Digital Humanities Research Hub, School of Advanced Study), Niilante Ogunsola-Ribeiro (Learning Technologist, School of Advanced Study), Simon Parr (Learning Technologist, School of Advanced Study), Marty Steer (Computational Humanist), and Elena Zolotariov (Liaison Officer, Mapping the Arts and Humanities, School of Advanced Study).

So far, the event has taken place on two occasions: (Raspberry) Pi Day (14 March 2025) and the “Born-Digital Collections, Archives and Memory” Conference (2–4 April 2025), a total of seven one-hour sessions. The event was designed to engage a diverse range of audiences, from people interested in AI to Raspberry Pi enthusiasts. It strove to create a space for thinking about artificial intelligence and bias from a critical humanities perspective. While technical dimensions were foregrounded, the activity’s entry and exit questionnaires also asked participants to consider the sociocultural and ethical implications of machine learning.

“Whose Bias?” offered a low-barrier access point into the workings of AI and machine learning, which are still largely the preserve of technical experts. To shift this dynamic, it was essential to empower participants to step into the shoes not only of data scientists and machine learning engineers but also of often-overlooked contributors, such as data annotators and other hidden labourers, and to take charge of the process from start to finish.

The event mirrored the three stages of machine learning: data collection, organisation/classification, and model training/testing. Participants progressed through a station for each stage to train an image classification model.

Instead of handling and selecting data on screens or in spreadsheets, participants used toys and snacks as training data: physical objects they sorted on paper plates, labelled, and photographed to train their own image classification model. The activity was set up as a hands-on walkthrough of how machine learning systems are developed, with each table representing one of the key tasks.

No technical knowledge or coding skills were required to take part, just curiosity.

Mapping the Arts and Humanities Project and “Whose Bias?”

The Mapping the Arts and Humanities Project has a vested interest in understanding how participatory activities like “Whose Bias?” can engage audiences in thinking critically about society’s most pressing issues. After all, many humanities subjects (such as literary studies, history, philosophy, and cultural studies, to name a few) are concerned with interrogating power structures, challenging assumptions, and questioning ideas of cultural uniformity. This commitment to critical reflection takes shape in conversations about revising literary canons, interpreting historical narratives, and understanding whose voices are included, or excluded.

Consequently, conversations around training machine learning models resonate strongly with humanities-based approaches, as they raise important questions about which data is selected, what narratives are being perpetuated, what stories are being told, who makes those choices, and how representation is shaped and weighted.

In this context, we believe collaborative research infrastructures have a vital part to play in making technology access more equitable and open without losing sight of humanities-led methods. It is only by inviting participants from diverse backgrounds and with varying levels of experience – including those beyond traditional academic environments – that we can create pluralistic dialogues. Such conversations would ideally both interrogate set assumptions and unsettle established ways of thinking that may be taken for granted within the humanities. By widening access and hybridising our methodologies (i.e. blending humanist and computational approaches), we can open new lines of inquiry that bridge the artificial divide between the humanities and technology.

That’s why we’re delighted to host and continue this urgent conversation on the MAHP blog. On the one hand, we will provide data-driven evidence to show how initiatives of this kind can tangibly refashion how we engage with emerging technologies, turning critical thinkers into critical doers and co-creators.

On the other hand, we will publish discussions on the essential role the humanities play in helping us understand how AI is transforming society, how we – as users and consumers – interact with AI technologies, and how collaborative humanities research infrastructures are a critical instrument in making AI more inclusive, transparent, and ethical.

The philosophy behind “Whose Bias?”: Prioritising discovery over direction

What “Whose Bias?” set out to do

“Whose Bias?” was conceived from a simple question: “What if we explored bias in AI like software engineers do, and in an interactive, fun and creative way?”

Kono explains:

“In software engineering, understanding how a system works often comes from hands-on experience and direct interaction. We run simulations, experiment, and break and then debug and fix things. We poke, prod, and play. Instruction manuals and documentation explain how systems should work, but practical engagement can reveal quirks and limits in ways that manuals alone cannot.”

She adds: “In doing so, we often have to think like the system’s creators and users. This means setting aside what we think we know. This practice of seeing things from different perspectives helps us tease out built-in assumptions or bias. There are biases that are embedded by design to make systems work effectively, and there are unintended biases. The key is understanding what kind of bias is present, its context, and who it benefits or excludes.”

Drawing on the above, “Whose Bias?” used supervised machine learning to explore the dynamics of human and machine interaction. It sought to reveal typically concealed or automated procedures and to show participants the different points at which bias can be introduced in machine learning. This gave participants both access to, and greater autonomy in, investigating how machine learning works. The facilitators also built in opportunities for critical reflection by including “pauses for thought.”

For technical simplicity, energy efficiency considerations, and replicability of the event, the organisers utilised Raspberry Pi hardware and Google’s Teachable Machine web application for image classification model training. By imposing hardware constraints, such as basic fixed-focus cameras, facilitators aimed to disrupt default expectations, nudging the participants to pause and question. Meanwhile, by strategically employing browser-based software, organisers planted the seeds for participants to query where their data was processed and stored as well as how their privacy was managed.

The structure of the activity was designed to mirror the machine learning process, with trial and error used to navigate technical complexity. For example, participants trained prototype models on a limited dataset and then tested them both with objects the model had been trained on and with “wildcard” objects it had never seen. When outputs were inaccurate, participants retrained their models to try to improve accuracy.

The retraining process revealed several things. First, participants’ discussions often centred on how their own data choices had inadvertently introduced bias, noticeably altering their views on the causes of AI bias (as we will shortly see). Second, by repeatedly retraining their models, participants began to investigate rigorously why the model behaved as it did, formulating and testing hypotheses about what the model was learning from the data and how. Moreover, participants noticed differences between what they and the learning algorithm were respectively “seeing”.
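The kind of bias participants uncovered can be illustrated with a deliberately tiny model. This is a hypothetical sketch, not how Teachable Machine works internally: it uses a 1-nearest-neighbour rule over two invented features (redness and spikiness, each scored 0 to 1), and every object and number in it is made up for illustration. Because all the toy animals in the first training set happen to be red, colour ends up separating the classes, so an unseen green frog is labelled a snack; adding one green animal and retraining corrects it.

```python
from math import dist

# Hypothetical features a camera might pick up: (redness, spikiness), each 0-1.
# Objects and values are invented for illustration, not data from the event.
training_data = [
    ((0.9, 0.6), "animals"),  # red toy dinosaur
    ((0.8, 0.7), "animals"),  # red toy crab
    ((0.1, 0.3), "snacks"),   # crisp
    ((0.2, 0.2), "snacks"),   # biscuit
]

def predict(examples, features):
    """1-nearest-neighbour: copy the label of the closest training example."""
    _, label = min(examples, key=lambda ex: dist(ex[0], features))
    return label

def accuracy(examples, test_items):
    """Share of (features, expected_label) pairs the model labels correctly."""
    hits = sum(predict(examples, f) == expected for f, expected in test_items)
    return hits / len(test_items)

# A "wildcard": a green toy frog that was never photographed for training.
wildcard = ((0.1, 0.6), "animals")

print(accuracy(training_data, training_data))  # "seen" objects: prints 1.0
print(predict(training_data, wildcard[0]))     # prints snacks (colour wins)

# Retrain with a green animal so colour no longer separates the classes.
retrained = training_data + [((0.2, 0.7), "animals")]  # green toy lizard
print(predict(retrained, wildcard[0]))         # prints animals
```

On the "seen" objects the model scores perfectly, which is why testing with wildcards mattered: only the unseen frog exposes that the model had latched onto colour rather than the category its creators intended.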

“Though we were thinking in these terms,” Kono expands, “we certainly weren’t sure how well [this software engineering approach] would translate into a tangible event, but we quickly realised that applying an engineering practice when designing learning programs can help people generally – it’s not just for techies.”

Adopting an open, exploratory approach, the event was guided by a set of open-ended objectives:

- Improve accessibility – by inviting participation without requiring technical expertise, and lowering barriers that often discourage engagement with the technical side of AI and ML.

- Offer context – by helping attendees explore real-world concerns about bias in machine learning in a simple, interactive activity.

- Encourage reflection – by prompting participants to ask their own questions and think about how AI bias connects to their lives, work, and communities.

Behind the scenes: naming the different stages of machine learning, choosing a dataset, ironing out the details

Drawing on playful, cross-disciplinary cues, the organisers hoped to create an environment that was unfamiliar without being alienating. The aim was partly to defamiliarise the machine learning process and leave space for participants to explore it without predefined expectations, but the approach also offered a more versatile way into the topic of bias in AI.

When it came to naming each step (what might conventionally be called “data collection,” “data classification,” and “model training & testing”), the team made a deliberate choice to break away from technical jargon. Kono comments:

“There wasn’t any kind of deep thinking about the names – we just said, let’s name these stations in different ways. We wanted to make it fun, to take people out of their norm as much as possible. If we had called the stations things like ‘data collection,’ ‘data organisation,’ or ‘machine learning,’ it would have immediately triggered preconceived ideas. That’s why the dataset was made up of toys and snacks.”

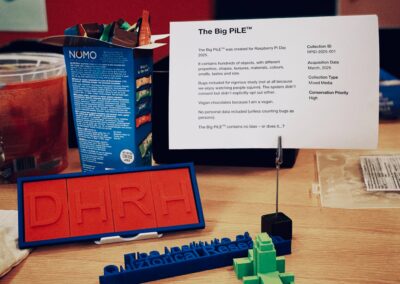

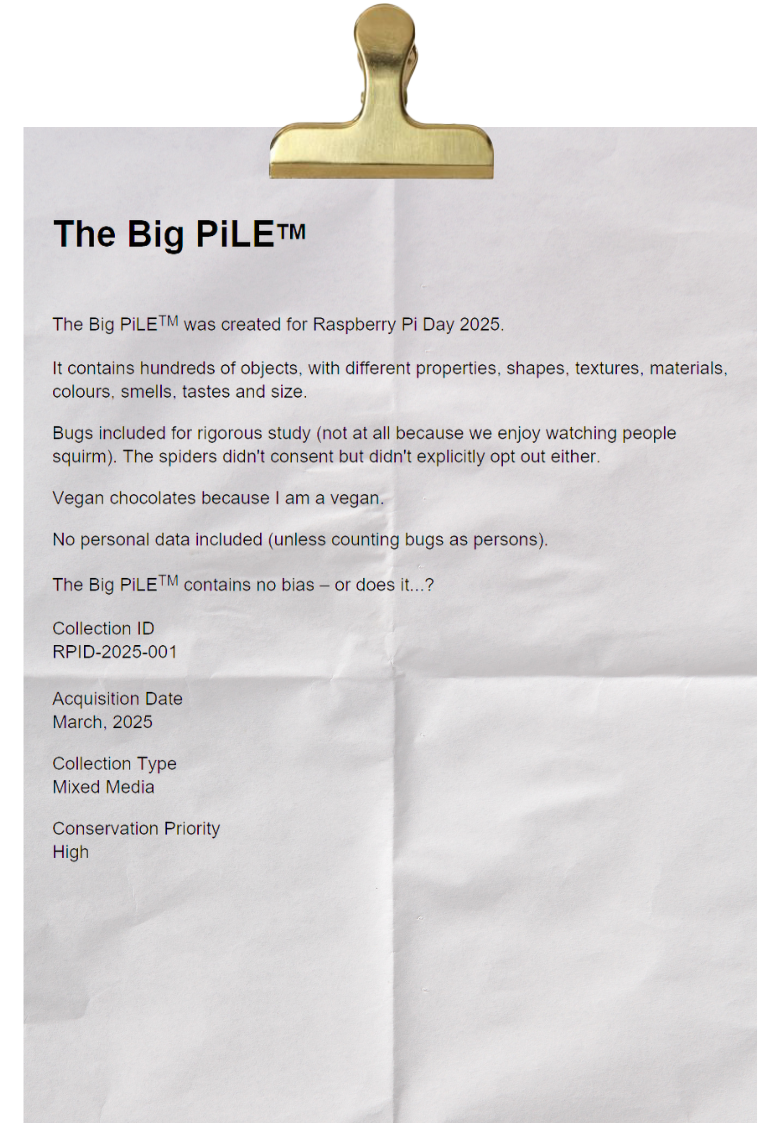

By inventing playful names for each stage, such as The Big PiLE™, The Great Sorting™, and The Training Ritual™, “Whose Bias?” introduced a layer of gamification. These titles deliberately sidestepped the “baggage” of technical terminology, which often narrows the frame of understanding and presumes a single “correct” way to perform a task.

The playful tone extended to the instructional materials.

Looking back on the event, Marty Steer reflects that the playful language and use of everyday objects were not necessarily planned with learning theory in mind, but that the approach closely aligns with ideas from schema theory. Explaining that removing familiar terms helped dislodge participants’ existing assumptions about AI, Steer adds:

“When something doesn’t directly map onto a familiar mental model, it helps you build a new one.”

Results of entry questionnaire (Q1) across the two events

What “Whose Bias?” (unintentionally) ended up doing

While the organisers deliberately avoided pre-scripted learning objectives, clear and measurable shifts were noticed in participants’ understanding, confidence, and ways of thinking about machine learning and AI.

A growing body of research suggests that rigid learning objectives can limit learning by pre-determining outcomes. In early education, moving beyond learning objectives and giving students more control has been shown to lead to “different kinds of unexpected learning” (Swann et al., 2012). In further education, scholars have argued that objectives may restrict curiosity and discourage divergent thinking – privileging the “right” answer over coming up with unique, creative solutions to a problem (Brockway, 2016).

Taking the above into consideration, “Whose Bias?” seems to have ended up, by accident, emulating an inquiry-based, constructivist pedagogical model. Inquiry-based learning (IBL) has been shown to promote “critical thinking, creativity, and active engagement,” while constructivist pedagogy prizes experience over rote instruction (Sam, 2024).

Furthermore, the physical and tactile elements of the event align closely, if unintentionally, with grounded cognition theory, which posits that physical interaction with tangible devices can enhance understanding by bridging digital concepts and real-world sensory experiences, thus “humanising interaction between computers and individuals” (Kallia, 2022).

Placing emphasis on doing, particularly learning through active interaction, allowed participants to encounter AI bias experientially and to engage with a machine learning algorithm. Rather than being guided toward pre-scripted conclusions, participants discovered how human decisions shape technical systems through playful and physical engagement.

Learning technologists Niilante Ogunsola-Ribeiro and Simon Parr remarked:

“The use of toys was a real innovation. It allowed participants to focus on the decision-making process and gain clarity on fundamentals of how the systems work rather than being overwhelmed by the data and code.”

Developing a relational understanding of AI and bias: a preliminary interpretation of the survey data

Before the activity, around 78% of participants described themselves as having “a little” understanding of AI, while only 22% felt they had “a lot.” By the end, 76% of participants agreed or strongly agreed that they now had a better grasp of how AI works. Further, in the exit survey, 81% reported an increase in their confidence in discussing AI bias, suggesting that even a short, hands-on encounter can help demystify seemingly complex concepts.

In the “Entry Questionnaire,” participants were also asked: “What do you think causes AI models to make unfair or wrong decisions?” The question was designed to sample common assumptions and prior knowledge, inviting participants to speculate on the causes of bias in AI generally (multiple select, 96 responses). Data was the most common answer (30%), followed by algorithms (28%) and human input (24%). These results indicate a relatively even distribution of views, with a slight lean toward technical causes (data and algorithms) over human input. The close split between these sources of bias may reflect a lack of clear understanding of how data, algorithms, and human input interact in the creation of bias.

In the “Exit Questionnaire,” the wording shifted: “If your AI model made a decision that you thought was unfair or wrong, what would you think caused it?” Unlike the more general framing of the earlier question, this aimed to prompt participants to reflect on their own experience with AI and machine learning in a relational way. It encouraged them to consider their role in shaping predictions. On this occasion, data was most frequently identified as the main cause of bias (44%), followed by human input (29%), while algorithm attribution dropped to 14%.

Notably, the question in the “Exit Questionnaire” received 70 responses (compared to 96 in the “Entry Questionnaire”), possibly hinting at a more selective approach: if participants selected fewer options on average, this may suggest greater confidence. The increased emphasis on data, and the marked reduction in identifying the algorithm as a potential source of bias, may also indicate that after engaging with the machine learning process, participants became more aware of the concrete aspects (data selection, classification, and model training) that are often overshadowed by bigger concepts such as the “black box.”
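The shift between the two questionnaires can be summarised as percentage-point changes, using only the shares reported above. Because the two questions were worded differently and received different numbers of responses (96 and 70), these are changes in share, not in the number of people choosing each cause; the names `entry`, `exit_` and `point_changes` are illustrative.

```python
# Shares (%) naming each cause, as reported for the entry and exit questionnaires.
entry = {"data": 30, "algorithm": 28, "human input": 24}
exit_ = {"data": 44, "algorithm": 14, "human input": 29}

def point_changes(before, after):
    """Percentage-point change for each cause listed in the entry survey."""
    return {cause: after[cause] - before[cause] for cause in before}

for cause, change in point_changes(entry, exit_).items():
    print(f"{cause}: {entry[cause]}% -> {exit_[cause]}% ({change:+d} points)")
# data: 30% -> 44% (+14 points)
# algorithm: 28% -> 14% (-14 points)
# human input: 24% -> 29% (+5 points)
```

The gain for data and the loss for algorithms are the same size (14 points), which is the “marked reduction” described above.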

Results of exit questionnaire (Q2) across the two events

A walkthrough of the activity

As mentioned previously, the layout of the “Whose Bias?” exhibition was modelled on three key stages of a machine learning process: data collection, data organisation, and model training and testing. Participants moved through the stages in sequence, with the option to return to any stage whenever they felt the need.

Each table or station introduced an essential step in the process, motivating participants to interact with physical objects, define classification labels, and observe how their decisions influenced the model’s learning.

Marty Steer noted:

“Our interactive approach allowed participants to experience building an AI model firsthand through data selection, labelling and training. The collective nature of the workshop reflected how real AI systems are built through human collaboration.”

Alongside the physical walkthrough, each part of the activity invited participants to pause and consider their own role in shaping the AI model. For instance, at every table a series of “pause for thought” questions was provided to encourage participants to think about what they were doing:

Questions during data collection

Questions during data classification

1. What makes each of your categories unique?
2. What is your thinking behind the choice of your category labels?
3. Do the category labels have specific meaning to you, or are they generally understood?

Questions during model training and testing

4. How re-usable is your model?

Raspberry Pi Day

The experience began with an entry survey and a short induction slideshow. From there, participants moved through three interactive zones.

- At the first station, participants created their dataset by selecting objects from The Big PiLE™, a collection of toys and snacks.

- At the second station, The Great Sorting™, participants used paper plates and pens to organise their dataset into distinct classes based on features they wanted their model to learn (for example: colour, shape, number of legs, or even texture).

- At the third and final station, The Training Ritual™, participants trained their models. They captured images with Raspberry Pi cameras, trained an image classification model, and tested the model’s responses to both “seen” and “unseen” objects to assess the model’s effectiveness in recognising patterns beyond its training data.

The walkthrough ended with an exit survey. Some participants stayed on to continue discussions amongst themselves or with facilitators, and to explore the event posters and a word cloud of participants’ classification labels displayed on a large screen.

Jonathan Blaney commented:

“It was striking how many people immediately became committed to their classification systems, but with an underlying irony. They knew their classifications were essentially arbitrary but cleaved to them anyway. They were both sophisticated thinkers and dogmatic classifiers.”

“Born-Digital Collections, Archives and Memory” Conference

At the Born-Digital Conference, the set-up largely remained the same, but a few important adjustments were made.

- The entry survey was completed beforehand, outside the room, to ensure each session started on time.

- Participants were encouraged to work in pairs to allow more peer-to-peer exchange. This format supported a more collaborative and self-directed approach to the activity.

- A navigational map was displayed on the large screen to guide participants through the machine learning process.

- Finally, a post-session reflection zone with seating was added, where participants could pin their plates to a board and stay on to continue their discussions.

Steer and Blaney further reflected:

“What’s fascinating about our approach is how the workshop itself became a learning system. The adjustments between each event weren’t just logistical. By responding to participant needs with thoughtful changes, we created a more meaningful space for critical thinking about human involvement in AI.”

The conversation continues…

As the first in a short series of blog posts, this introduction aimed to sketch the thinking behind “Whose Bias?”, outline how the event was designed, and present the experience of walking through the machine learning process. We’re grateful to everyone who took part, contributed ideas, or helped shape the event in real time. We’re especially thankful to the participants for their openness, curiosity, and thoughtful engagement with the machine learning process.

Over the coming months, we’ll be sharing further reflections and results that we hope you find informative. We’ll look at how different audiences engaged with the processes and what we can learn from their responses about designing more inclusive and engaging activities that are responsive rather than one-size-fits-all. Most significantly, we’ll discuss the role of collaborative, humanities-led spaces in helping people navigate the technologies shaping our world.

We’re excited to keep exploring these questions together in the months ahead.

Works cited

Brockway, Dominic. “When Lesson Objectives Limit Learning.” School Leadership Today 7, no. 4 (October 3, 2016).

Kallia, Maria, and Quintin Cutts. “Conceptual Development in Early-Years Computing Education: A Grounded Cognition and Action Based Conceptual Framework.” Computer Science Education 33, no. 4 (October 2, 2023): 485–511. https://doi.org/10.1080/08993408.2022.2140527.

Swann, Mandy, Alison Peacock, and Mary Jane Drummond. Creating Learning Without Limits. McGraw-Hill Education (UK), 2012.

Sam, Ramaila. “Systematic Review of Inquiry-Based Learning: Assessing Impact and Best Practices in Education.” F1000Research 13 (September 11, 2024): 1045. https://doi.org/10.12688/f1000research.155367.1.

Elena Zolotariov is the Mapping the Arts and Humanities Liaison Officer at the School of Advanced Study, University of London.

Jonathan Blaney is the Digital Humanities Research Software Engineer at Cambridge Digital Humanities (CDH).

Kunika Kono is the Technical Lead at the Digital Humanities Research Hub (DHRH), School of Advanced Study, University of London.

Niilante Ogunsola-Ribeiro is a Learning Technologist at the School of Advanced Study, University of London.

Simon Parr is a Learning Technologist at the School of Advanced Study, University of London.

Marty Steer is a Computational Humanist.